LLM Research Prompt: Replace Your Research Team

- September 10, 2025

AI just killed the research department. Say what you want — but the tools are here. If you pay people $3–6K/month to churn research memos, you can now get a CEO-grade briefing in minutes with the right prompt.

Below is the exact LLM research prompt I use to make any model act like an elite research analyst — plus how to run it, verify results, and avoid the obvious traps.

Use this LLM research prompt to make any large language model perform structured, executive-style research: paste the prompt, insert your topic, ask for 3–5 subtopics, request trends/data/examples, add sources, and finish with a 5-point “Smart Summary.”

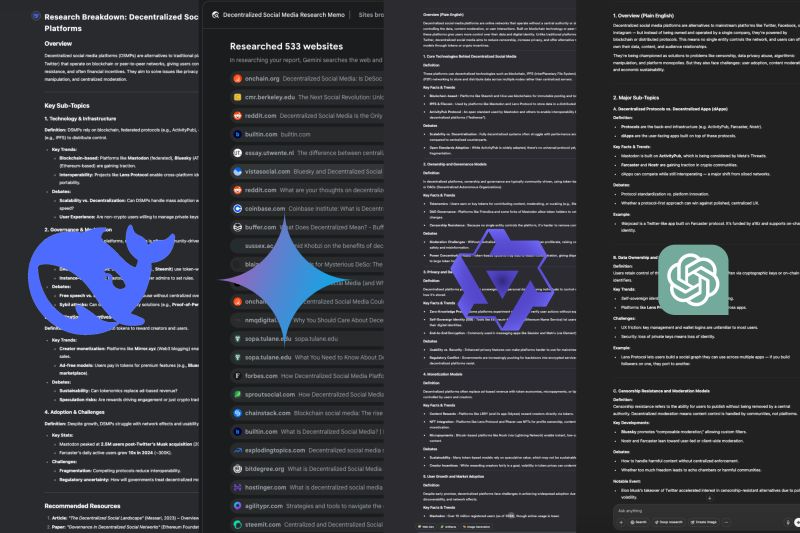

Source: LinkedIn user

Why this prompt works

- Role framing: telling the model to act like an elite research analyst sets expectations.

- Tight process: step-by-step instructions force structure — overview, components, evidence, resources, summary.

- Skimmable output: CEOs want short, actionable bullets. The prompt designs for that.

This mirrors how modern LLM research features run multi-step internet syntheses in minutes. (OpenAI)

The prompt — copy / paste / run

I want you to act as an elite research analyst with deep experience in synthesizing complex information into clear, concise insights.

Your task is to conduct a comprehensive research breakdown on the following topic:

[ Insert your topic here ]

1. Start with a brief, plain-English overview of the topic.

2. Break the topic into 3–5 major sub-topics or components.

3. For each sub-topic, provide:

– A short definition or explanation

– Key facts, trends, or recent developments

– Any major debates or differing perspectives

4. Include notable data, statistics, or real-world examples where relevant.

5. Recommend 3–5 high-quality resources for further reading (articles, papers, videos, or tools).

6. End with a “Smart Summary”: 5 bullet points that distill the key takeaways.

Guidelines:

– Write in a clear, structured format

– Prioritize relevance, accuracy, and clarity

– Use formatting (headings, bullets) to make it skimmable and readable
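If you run the prompt often, it can help to keep it as a template and fill in the topic programmatically. The sketch below is a minimal illustration, not part of the original prompt: `build_prompt` is a hypothetical helper name, and it assumes you have saved the prompt text with the `[ Insert your topic here ]` placeholder left intact.

```python
# Placeholder exactly as it appears in the prompt above.
PLACEHOLDER = "[ Insert your topic here ]"


def build_prompt(template: str, topic: str) -> str:
    """Return the research prompt with the placeholder replaced by the topic.

    `template` is the full prompt text (assumed to contain PLACEHOLDER).
    """
    if PLACEHOLDER not in template:
        raise ValueError("template does not contain the topic placeholder")
    topic = topic.strip()
    if not topic:
        raise ValueError("topic must not be empty")
    return template.replace(PLACEHOLDER, topic)


if __name__ == "__main__":
    # Shortened template for illustration; use the full prompt in practice.
    template = (
        "Your task is to conduct a comprehensive research breakdown "
        "on the following topic:\n\n" + PLACEHOLDER
    )
    print(build_prompt(template, "synthetic data governance"))
```

Failing loudly on an empty topic or a missing placeholder avoids sending the model a prompt that still contains the literal placeholder text.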

How to run it (practical playbook)

- Paste the prompt into ChatGPT, Gemini, Claude, DeepSeek, Qwen, Mistral — any LLM.

- Insert your topic, worded precisely (e.g., “decentralized social media platforms,” “synthetic data governance,” or “2026 adtech trends”).

- Two-pass method:

- Pass 1 — Synthesis: run the prompt and get the memo.

- Pass 2 — Verify: ask the model to “find 5 sources (with links) that support each key fact” and flag anything speculative. Use the model’s function-calling or browsing features where available. (OpenAI)

- Cross-check: run the same prompt in a second model (different architecture) and compare contradictions — that’s your quality filter.
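The two-pass method and the cross-check can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a specific vendor integration: `Ask` stands for any function you write that sends a prompt to a model and returns its reply as text, and `two_pass`, `cross_check`, and `VERIFY_TEMPLATE` are hypothetical names. Because `Ask` is assumed to be stateless, the memo is pasted back into the second prompt.

```python
from typing import Callable, Dict

# Hypothetical interface: takes a prompt, returns the model's reply as text.
Ask = Callable[[str], str]

# Pass 2 wording follows the playbook above; the memo is included because
# the ask function is assumed to keep no conversation history.
VERIFY_TEMPLATE = (
    "Here is a research memo:\n\n{memo}\n\n"
    "Find 5 sources (with links) that support each key fact, "
    "and flag anything speculative."
)


def two_pass(ask: Ask, research_prompt: str) -> Dict[str, str]:
    """Pass 1 produces the memo; pass 2 asks the model to back it up."""
    memo = ask(research_prompt)
    review = ask(VERIFY_TEMPLATE.format(memo=memo))
    return {"memo": memo, "review": review}


def cross_check(models: Dict[str, Ask], research_prompt: str) -> Dict[str, str]:
    """Run the same prompt through several models, keyed by a label you pick."""
    return {name: ask(research_prompt) for name, ask in models.items()}


if __name__ == "__main__":
    # Stand-in model so the sketch runs without any API key.
    def echo_model(prompt: str) -> str:
        return f"(reply to {len(prompt)} characters of prompt)"

    print(two_pass(echo_model, "Research prompt goes here"))
    print(cross_check({"model_a": echo_model, "model_b": echo_model}, "Same prompt"))
```

Comparing the memos returned by `cross_check` is still a manual step: read them side by side and treat any contradiction as a claim to verify.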

Tools that scale this well: ChatGPT (with Deep Research / browsing), Elicit for academic literature, and agent frameworks that chain tools.

Example output structure (what you’ll get)

- Overview (2–3 sentences)

- 3–5 subtopics (each: definition, trends, debates)

- Data & examples (year + source when possible)

- 3–5 recommended resources (links)

- Smart Summary (5 bullets) — ready for a board deck
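A quick way to confirm a memo follows this structure is a rough text check. The sketch below is an assumption-heavy illustration: it guesses that the model labels its sections with the words "overview", "resources", and "smart summary", and that summary bullets start with `-`, `*`, `•`, or a number. Real outputs vary, so treat a miss as a prompt to look, not as proof of a problem. The function names are hypothetical.

```python
import re
from typing import List

# Section labels assumed to appear somewhere in the memo (case-insensitive).
EXPECTED_SECTIONS = ("overview", "resources", "smart summary")

# Matches "- text", "* text", "• text", "1. text", or "1) text".
BULLET = re.compile(r"^\s*(?:[-*•]|\d+[.)])\s+(.*)$")


def missing_sections(memo: str) -> List[str]:
    """Return the expected section labels that do not appear in the memo."""
    text = memo.lower()
    return [name for name in EXPECTED_SECTIONS if name not in text]


def smart_summary_bullets(memo: str) -> List[str]:
    """Return the bullet items that follow the first 'Smart Summary' line."""
    lines = memo.splitlines()
    for i, line in enumerate(lines):
        if "smart summary" in line.lower():
            bullets: List[str] = []
            for following in lines[i + 1:]:
                match = BULLET.match(following)
                if match:
                    bullets.append(match.group(1).strip())
                elif following.strip() and bullets:
                    break  # non-bullet text after the bullets ends the section
            return bullets
    return []
```

For example, `len(smart_summary_bullets(memo)) == 5` tells you whether the model delivered the five-bullet summary the prompt is meant to produce.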

Limitations & guardrails (don’t skip these)

- Hallucinations: LLMs invent citations. Always verify links and dates.

- Bias & perspective: models reflect training data; ask for alternative viewpoints explicitly.

- Depth vs. speed: for frontier academic research you still need domain experts to evaluate methodology. Studies show LLMs assist literature reviews and synthesis well — but not without oversight.

Quick verification checklist

- Do 2–3 manual source checks (open the cited links and confirm they say what the memo claims).

- Ask the model: “Which points are low-confidence? Mark them.”

- Use a specialized tool (Elicit, Semantic Scholar) for academic pulls.
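To make the manual source checks less ad hoc, you can pull every link out of the memo and pick a few at random to open. The sketch below uses only the Python standard library; the regular expression is a rough pattern for `http`/`https` links, not a full URL parser, and the function names are hypothetical. It does not fetch anything: opening and reading the pages stays a human job.

```python
import random
import re
from typing import List, Optional

# Rough pattern: stops at whitespace and common closing punctuation.
URL_RE = re.compile(r"https?://[^\s)\]>\"']+")

# Follow-up question taken from the checklist above.
LOW_CONFIDENCE_PROMPT = "Which points are low-confidence? Mark them."


def extract_links(memo: str) -> List[str]:
    """Return unique links in order of first appearance."""
    cleaned = (url.rstrip(".,;:") for url in URL_RE.findall(memo))
    return list(dict.fromkeys(cleaned))


def pick_for_manual_check(
    links: List[str], k: int = 2, seed: Optional[int] = None
) -> List[str]:
    """Pick up to k links at random to open and read by hand."""
    rng = random.Random(seed)
    return rng.sample(links, min(k, len(links)))
```

Sampling at random, rather than always opening the first link, makes it harder for a single fabricated citation further down the memo to slip through.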

Where this saves money (and where it doesn’t)

- Saves: early scoping, trend spotting, competitive memos, investor one-pagers.

- Not a full replacement: experimental design, lab work, deep peer review, ethical sign-offs — humans still own those.

By 2030, activities accounting for up to 30 percent of hours currently worked in the US could be automated, a shift accelerated by generative AI, as organizations redesign workflows around it. That makes LLM research assistants a practical productivity lever, not just a novelty. (McKinsey & Company)

Conclusion

This one LLM research prompt is your fastest lever to turn any large model into a useful research analyst. Use the two-pass method, verify sources, and fold outputs into your workflow. Explore more AI tools on TheAISurf.

FAQs (Google PAA style)

Q: Can an LLM really replace a human researcher?

Not entirely. LLMs excel at scoping, synthesis, and first-draft memos — they’re fast and cheap. But humans must verify sources, judge methodology, and carry ethical responsibility. (arXiv)

Q: Which models should I try first?

Start with ChatGPT (Deep Research / browsing) and a second model like Claude or Gemini for cross-checking. Use Elicit for academic literature pulls. (OpenAI, teach.its.uiowa.edu)

Q: How do I stop the AI from hallucinating citations?

Ask for source links, then manually open 2–3 of them. Use function-calling where available and insist the model flag low-confidence claims.